


Viseme Reference


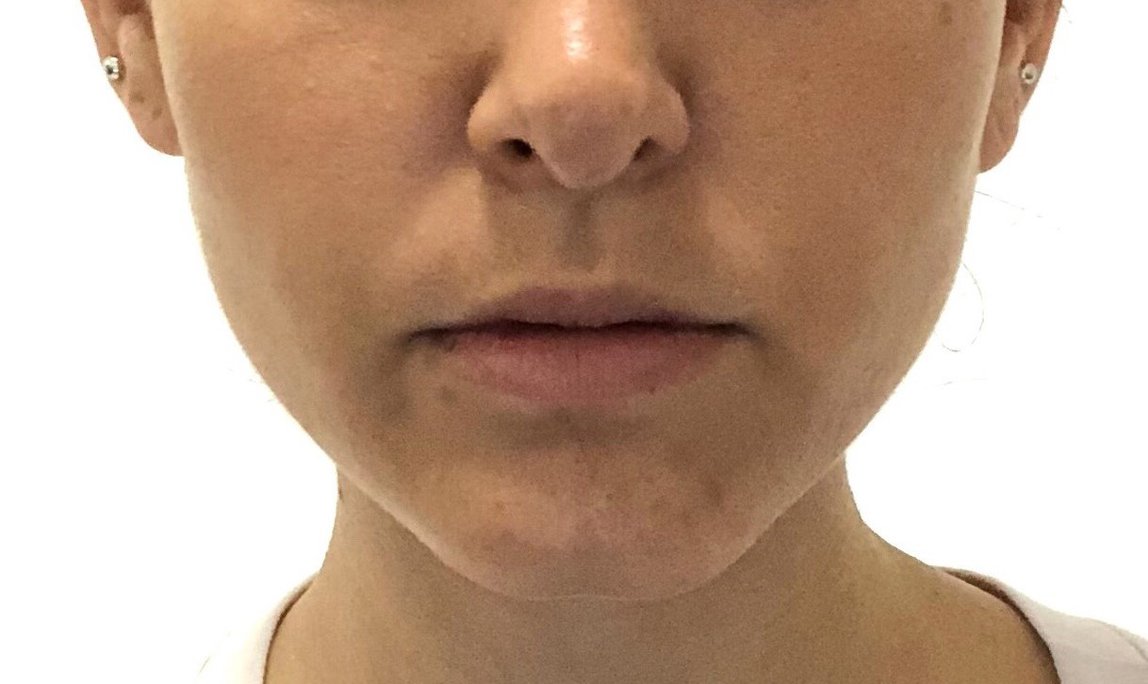

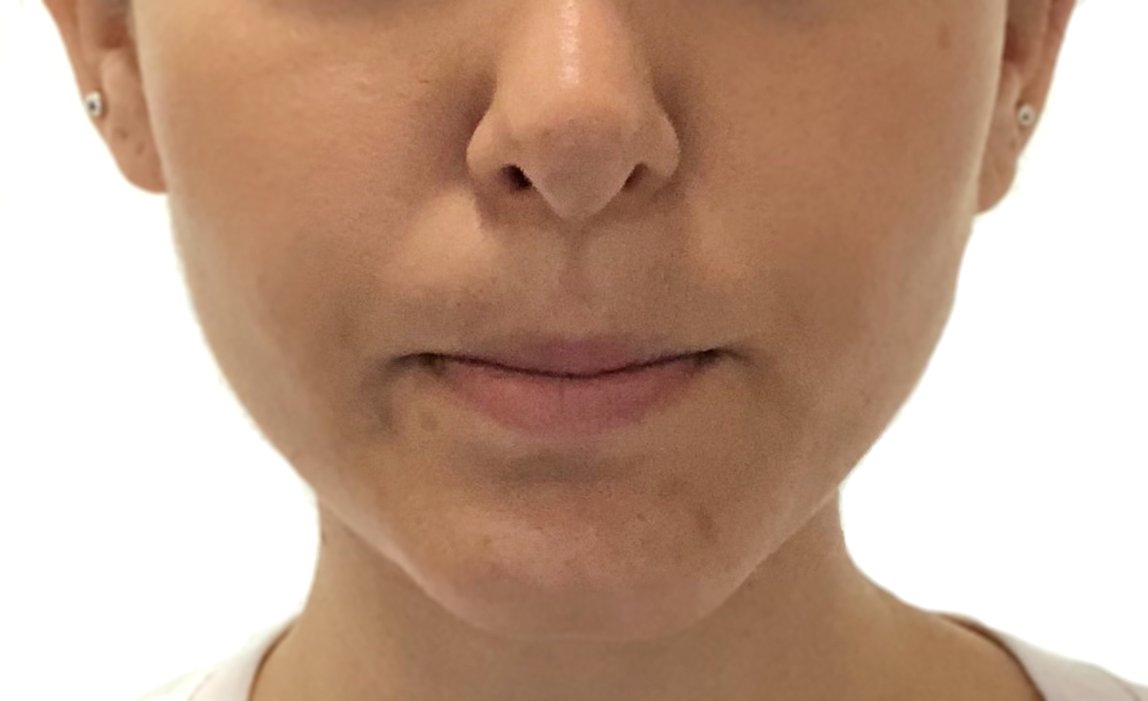

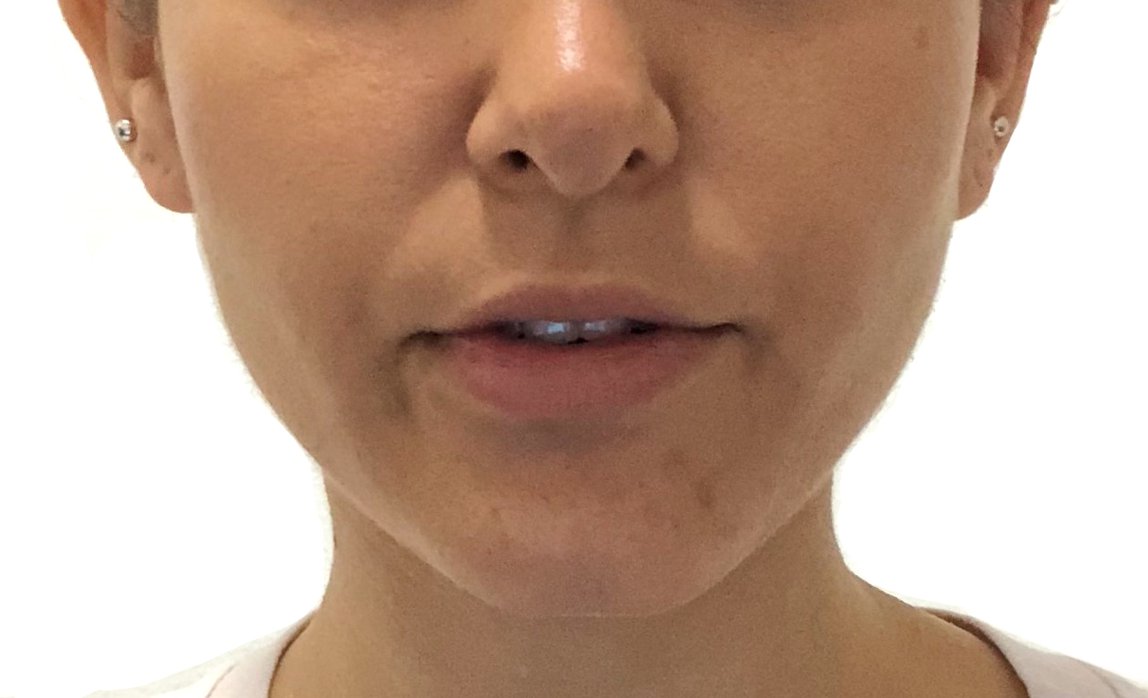

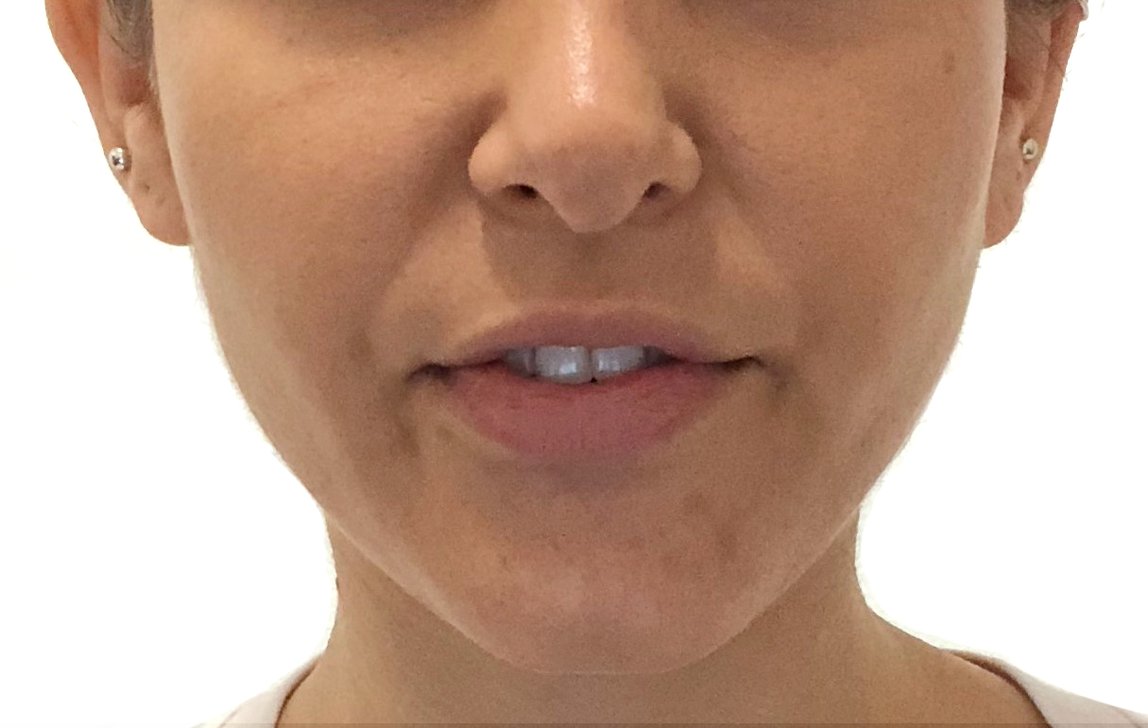

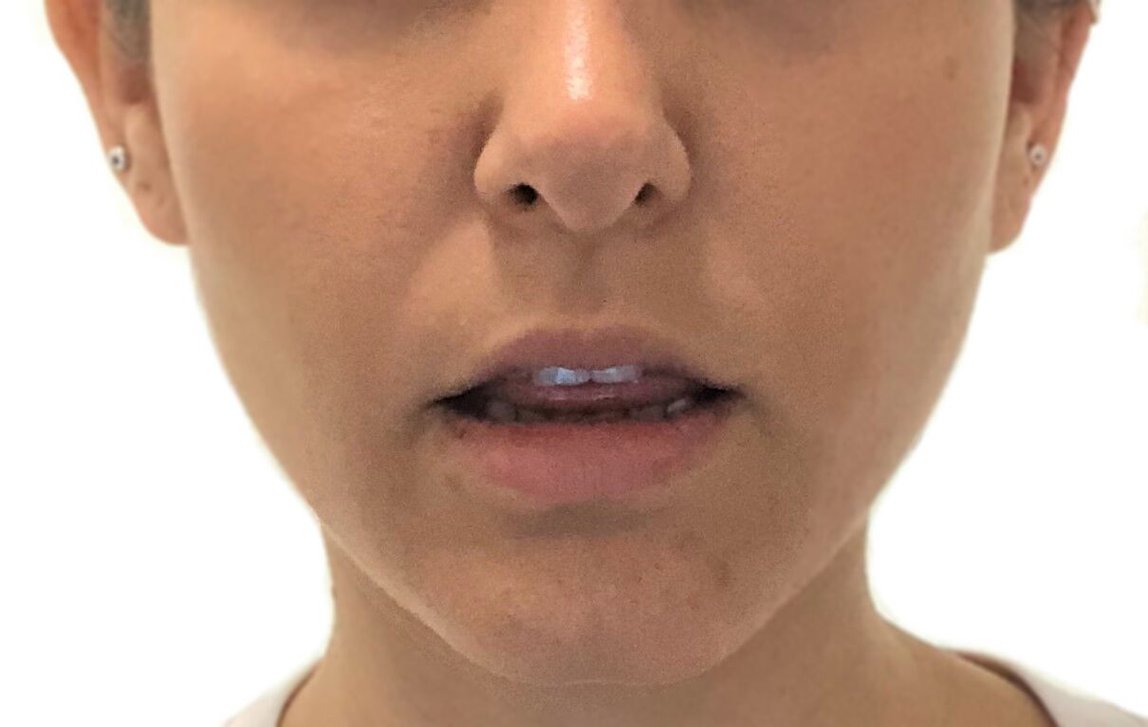

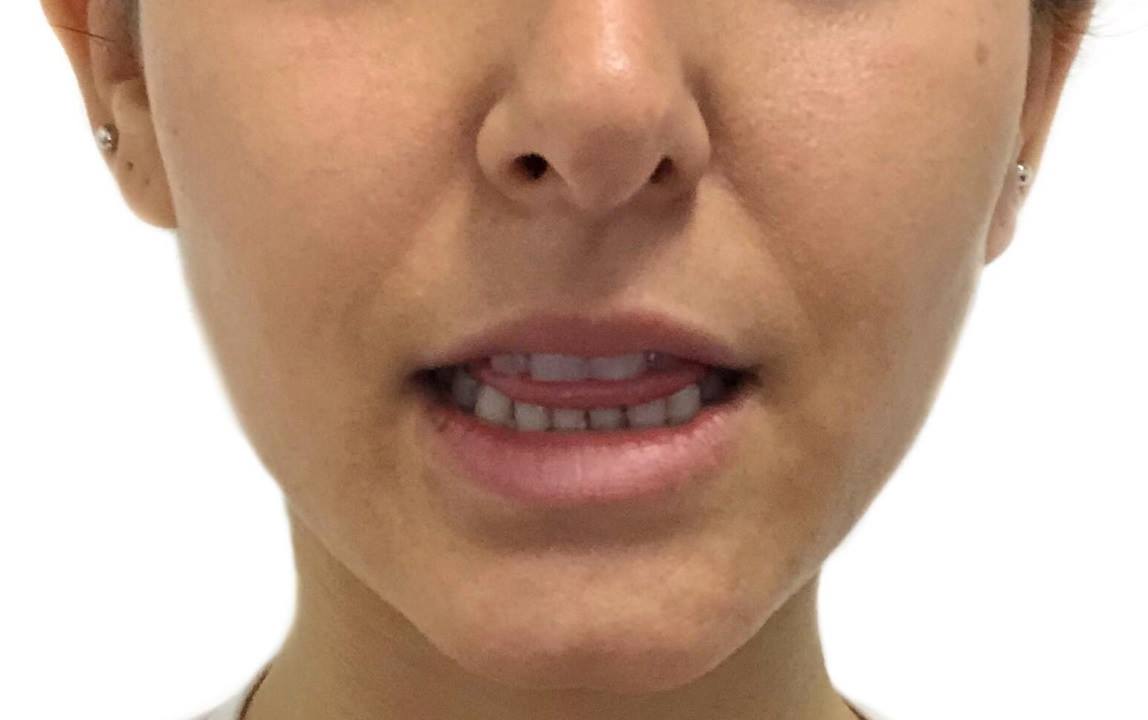

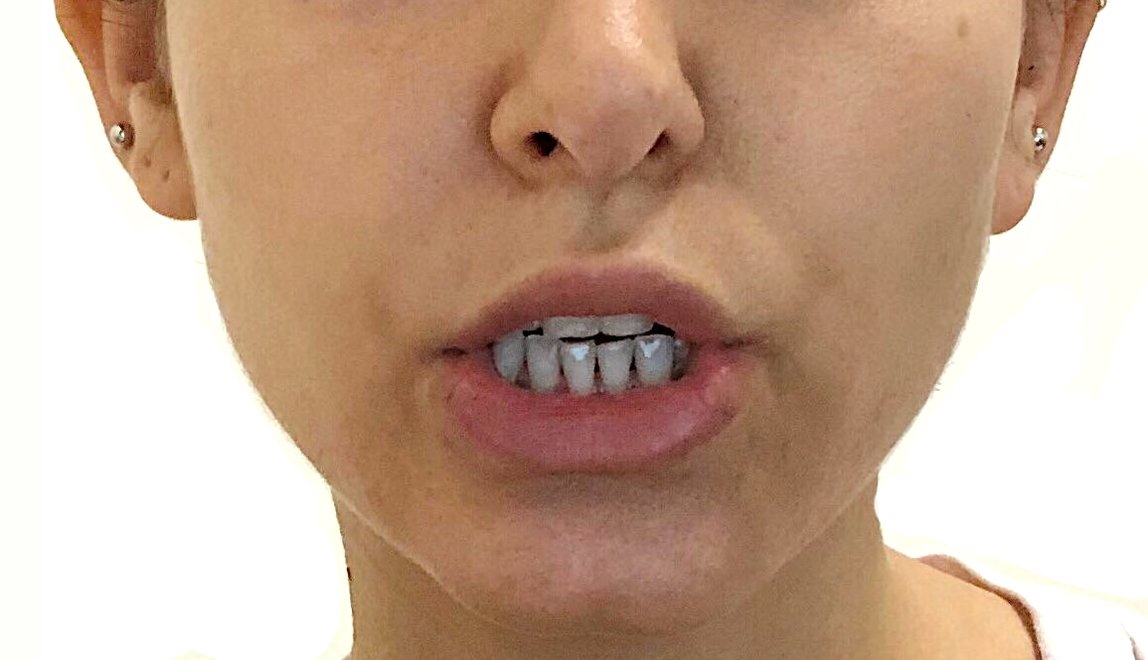

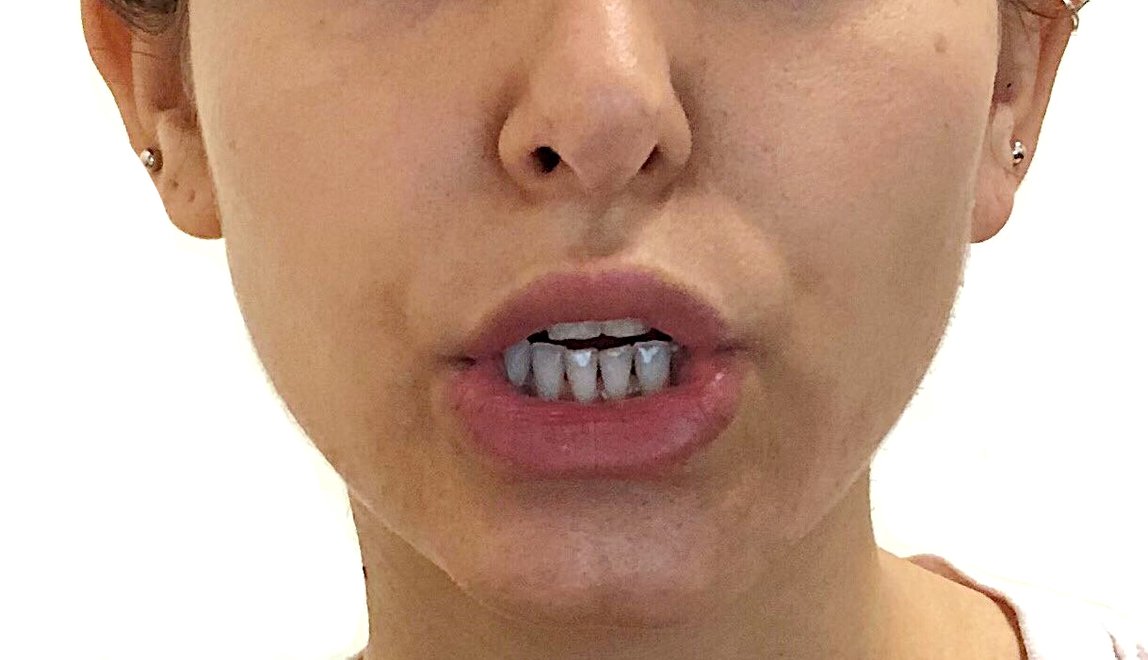

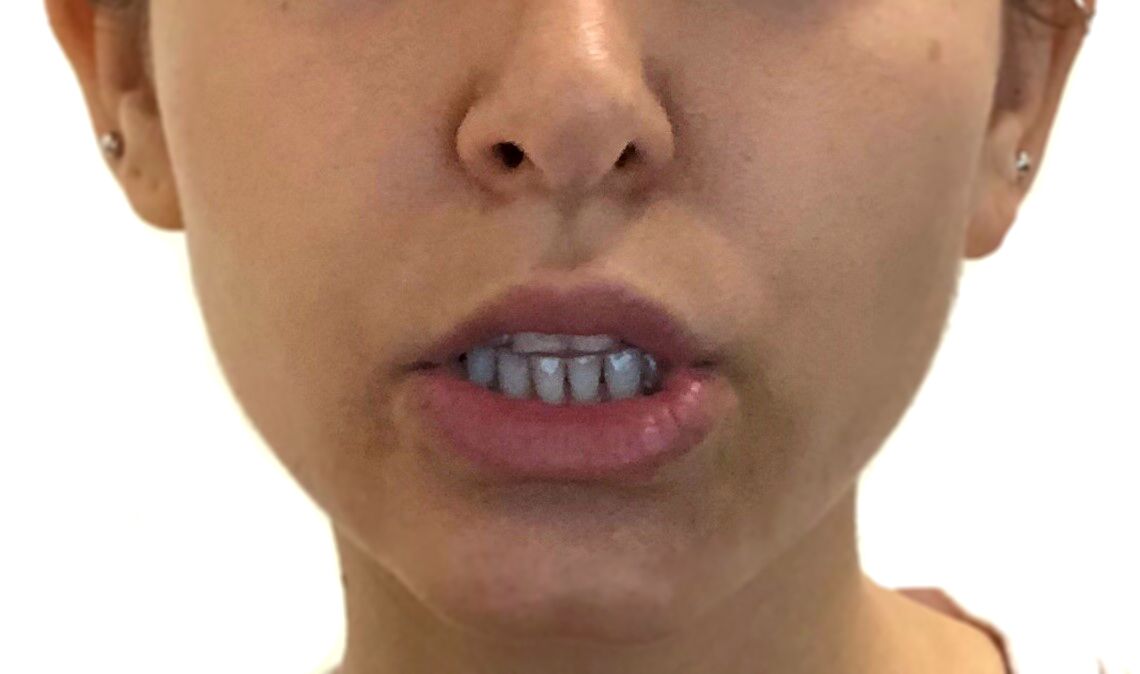

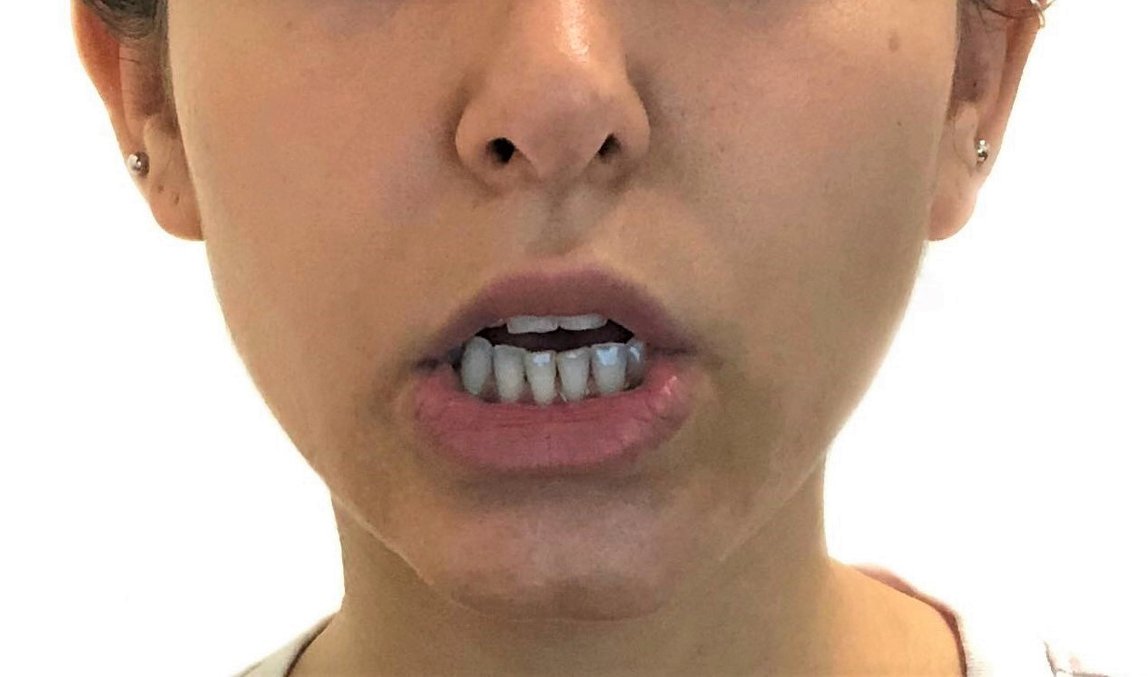

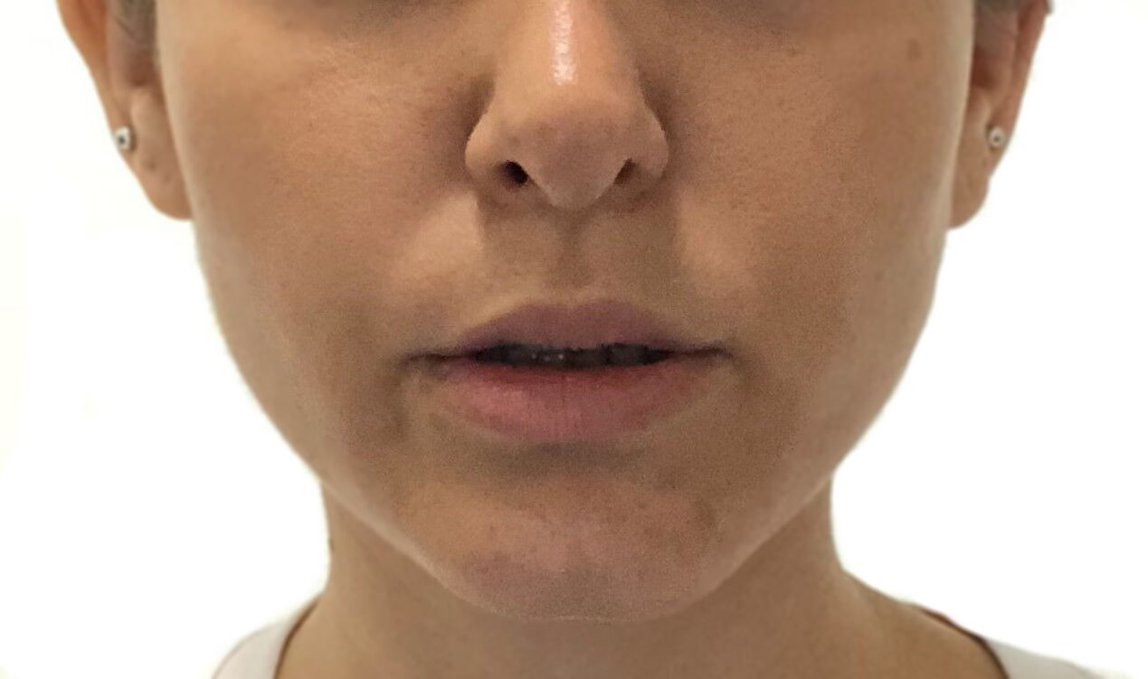

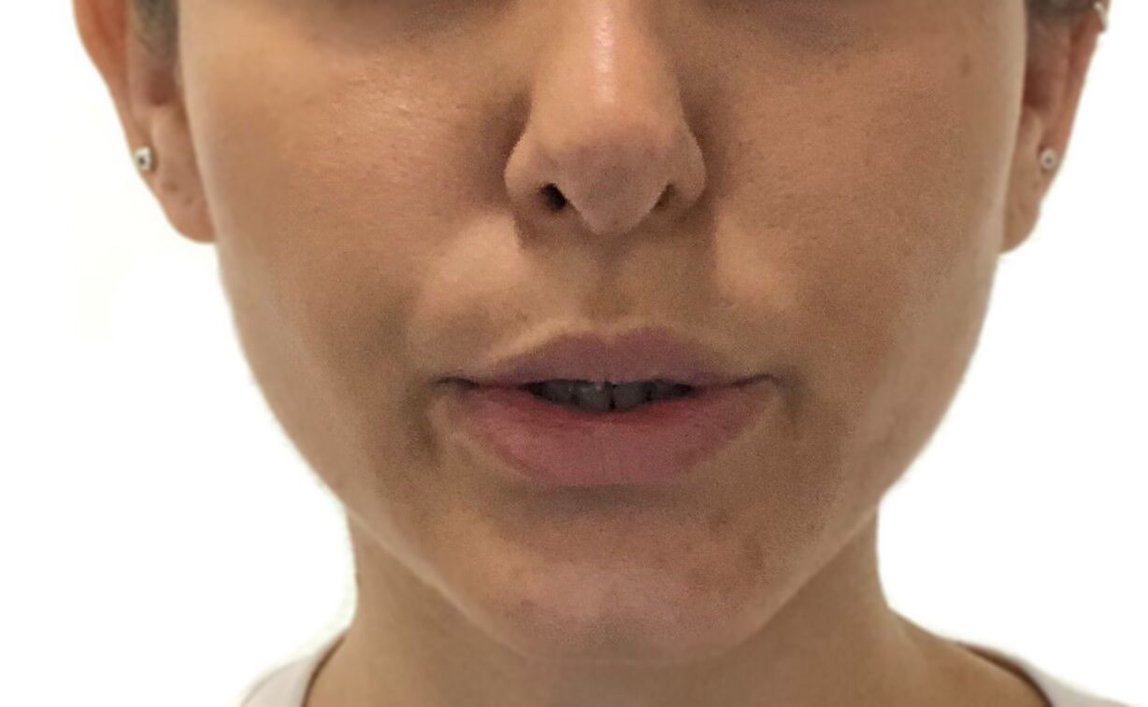

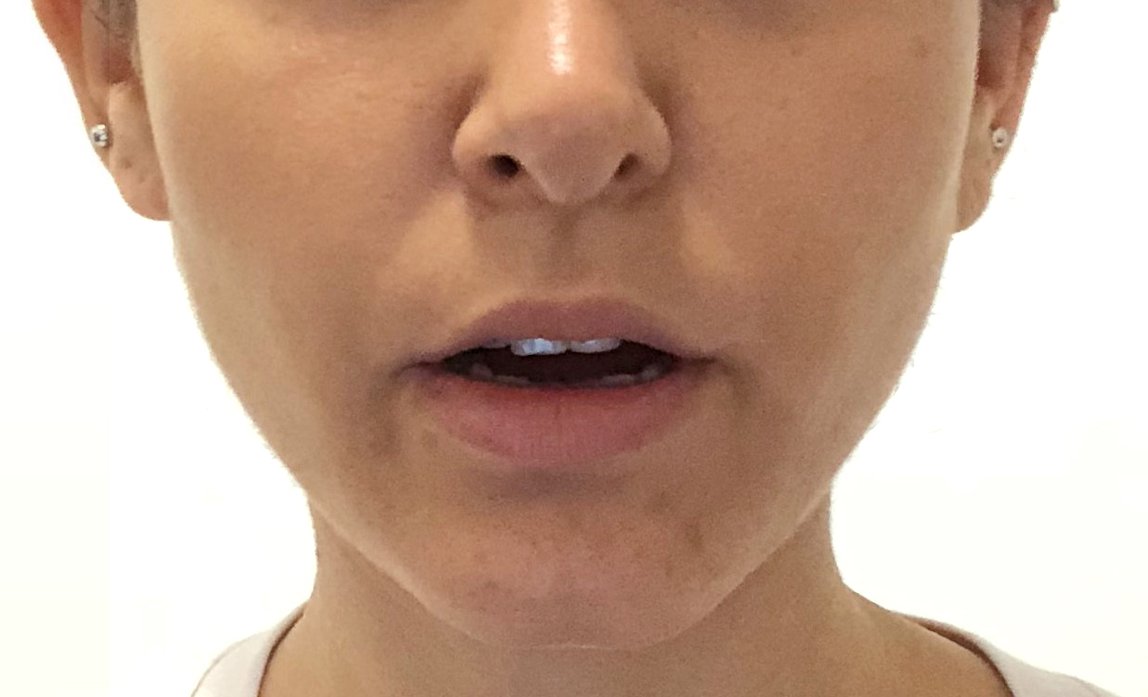

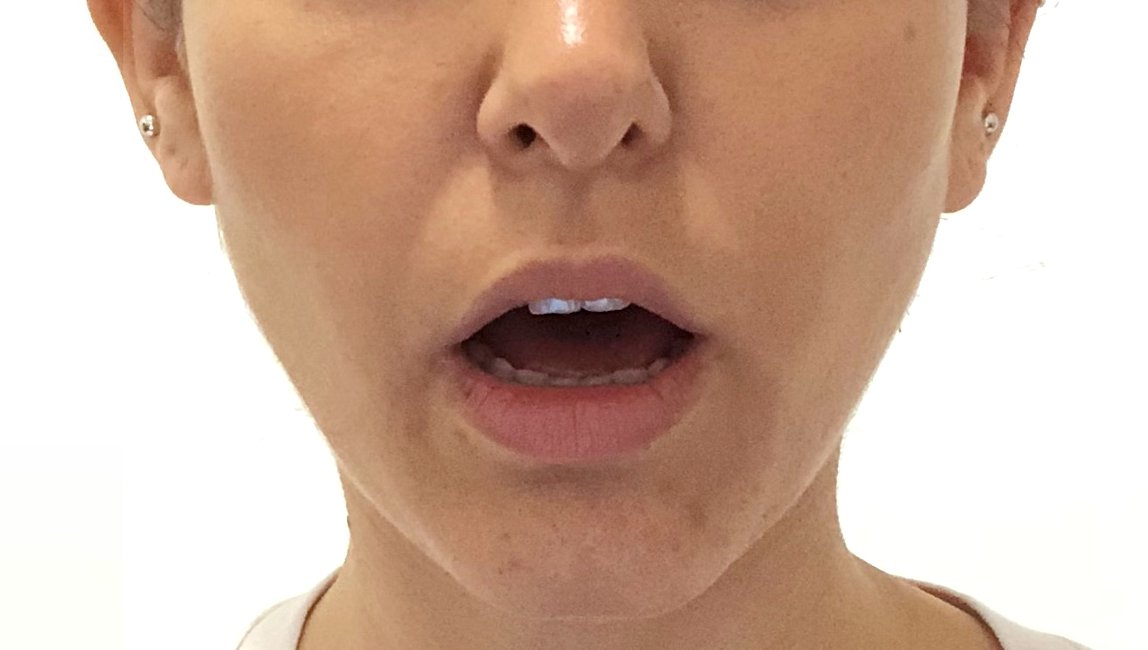

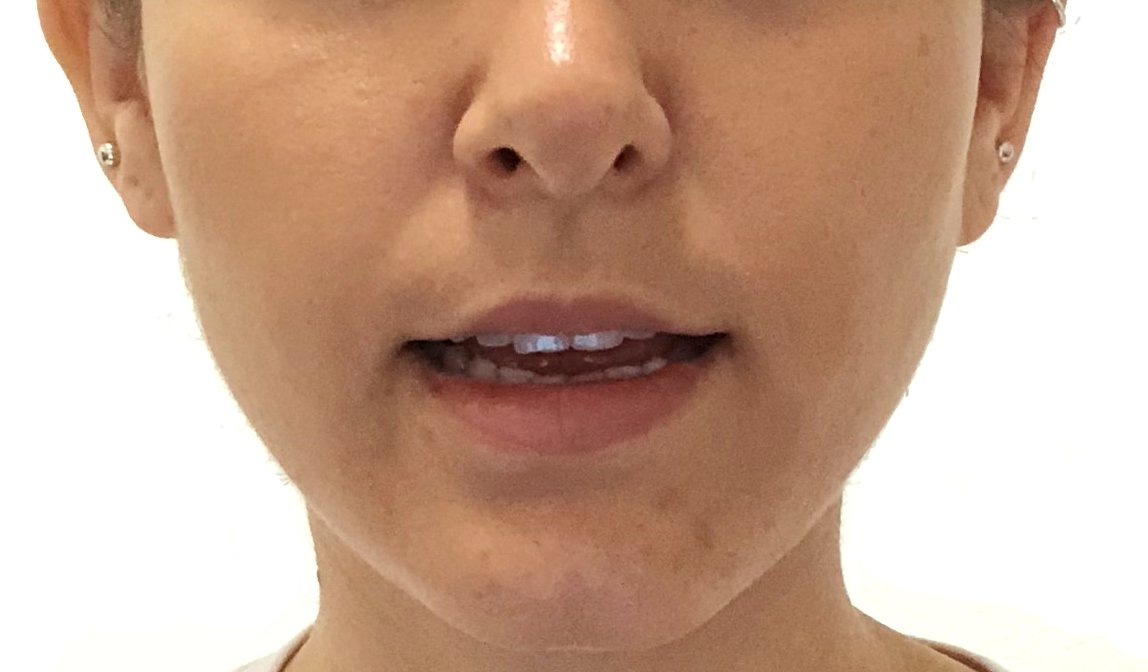

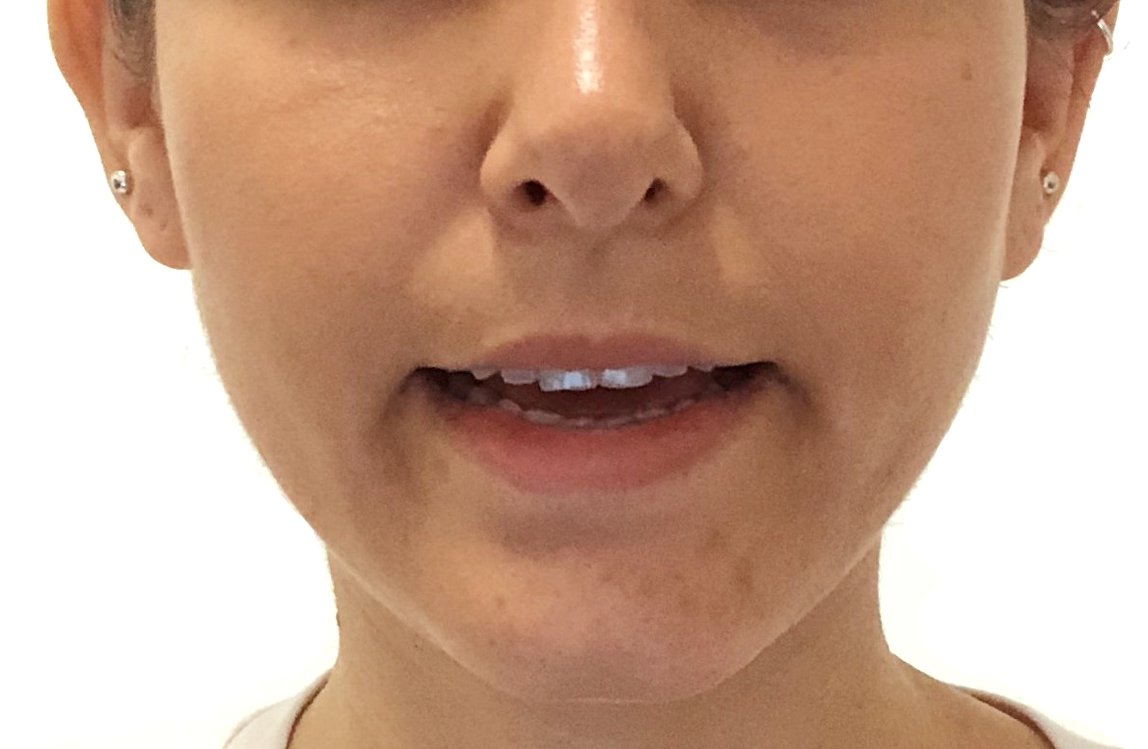

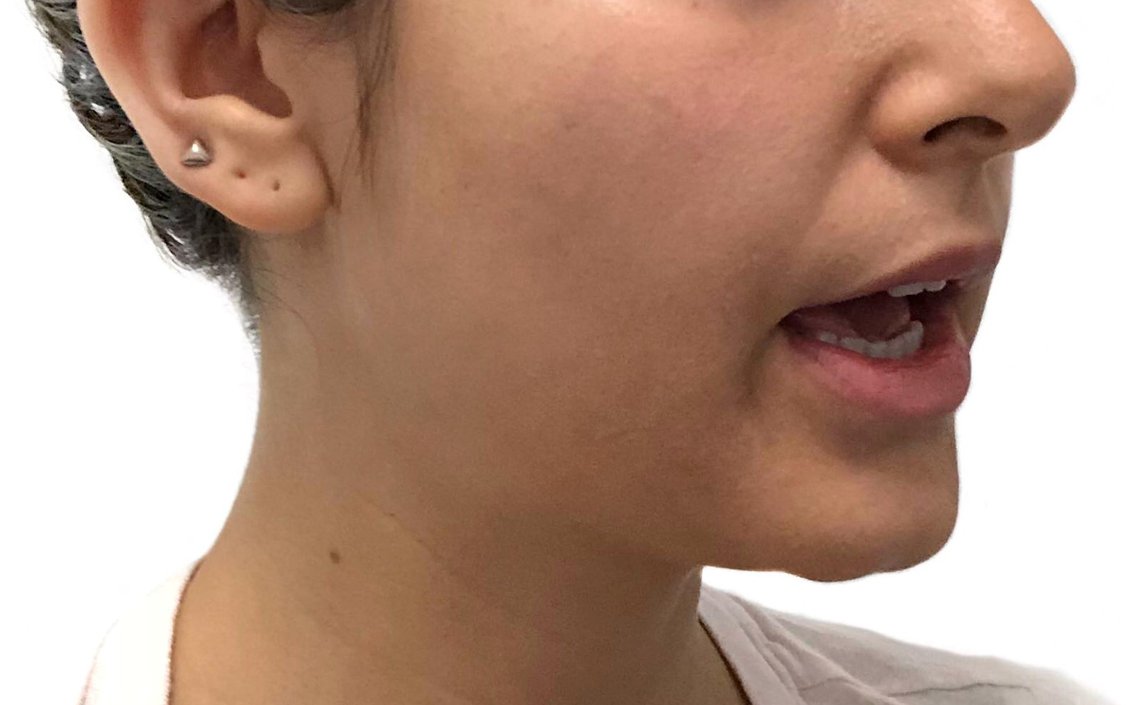

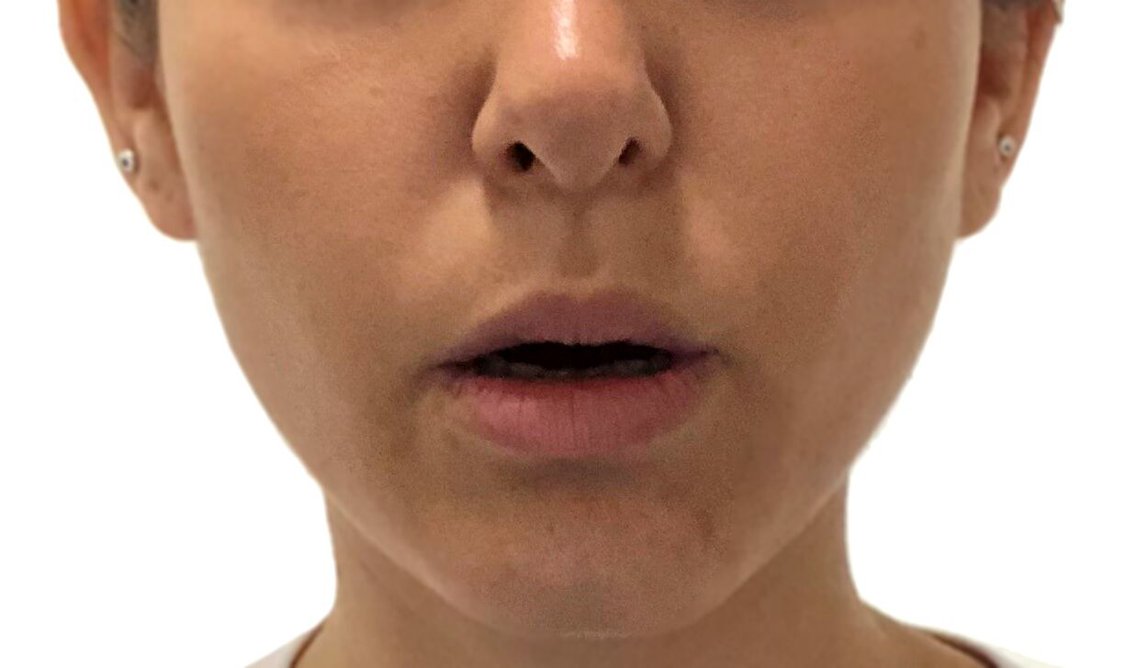

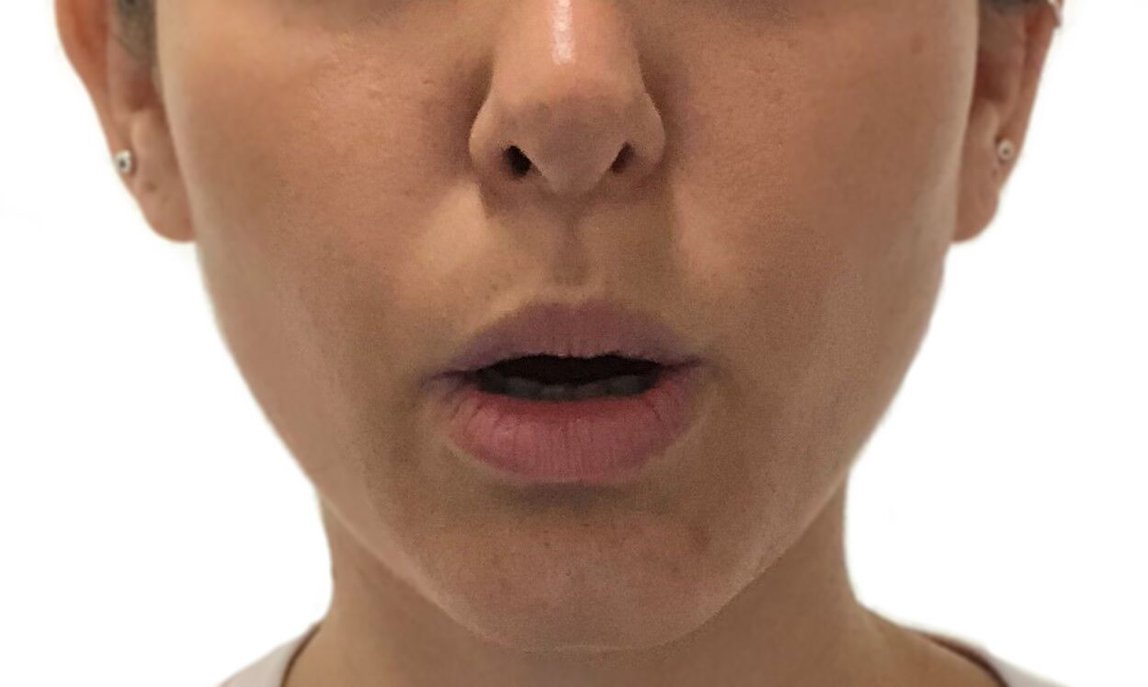

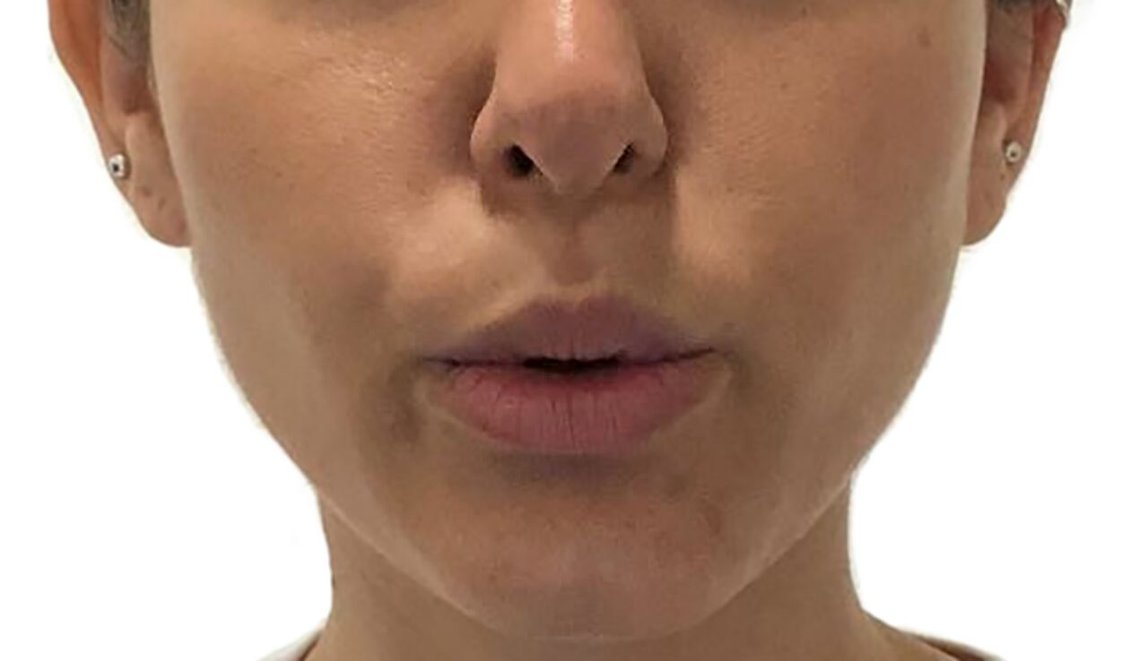

Oculus Lipsync maps human speech to a set of mouth shapes called “visemes”, the visual analog of phonemes. Each viseme depicts the mouth shape for a specific set of phonemes, and the visemes are interpolated over time to simulate natural mouth motion. This page provides the reference images we used to create our own demo shapes. Each row gives the viseme name, example phonemes that map to that viseme, example words, and images showing both mild and emphasized production of the viseme. We hope you find these useful when creating your own models. For more information on these 15 visemes and how they were selected, see the Viseme MPEG-4 Standard documentation.
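The interpolation of visemes over time can be illustrated with a small sketch. This is not the Lipsync implementation: the simple per-frame smoothing, the helper names `smooth_weights` and `one_hot`, and the `alpha` parameter are all assumptions made for illustration, and the viseme names and their order should be verified against the SDK you are using.

```python
# Assumed names and order for the 15 visemes; verify against your SDK.
VISEMES = ["sil", "PP", "FF", "TH", "DD", "kk", "CH", "SS",
           "nn", "RR", "aa", "E", "ih", "oh", "ou"]


def one_hot(name):
    """Return a weight list with 1.0 for the named viseme and 0.0 elsewhere."""
    return [1.0 if v == name else 0.0 for v in VISEMES]


def smooth_weights(current, target, alpha):
    """Move each weight a fraction `alpha` of the way toward its target.

    alpha = 1.0 snaps to the target immediately; smaller values blend
    more slowly. This is one hypothetical way to interpolate visemes.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    if len(current) != len(target):
        raise ValueError("weight lists must have the same length")
    return [c + alpha * (t - c) for c, t in zip(current, target)]


# Blend from silence toward the "aa" mouth shape over three frames.
weights = one_hot("sil")
target = one_hot("aa")
for _ in range(3):
    weights = smooth_weights(weights, target, alpha=0.5)

print(weights[VISEMES.index("sil")], weights[VISEMES.index("aa")])
```

In a character rig, each weight would typically drive one blend shape modeled from the reference images, so a partially blended state like the one above shows a mouth that is mostly in the "aa" shape while still relaxing from silence.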

Animated example

The animation that follows shows the visemes from the reference image section.

Reference Images

Click an image to view it at a larger size. Only a subset of phonemes is shown for each viseme.